🔎 Focus: Index Clean Up

🔴 Impact: High

🔴 Difficulty: High

Dear Tech SEO 👋

Nothing hurts more than a sudden drop in traffic…

Well, actually, one thing hurts more than that: a 40% drop in revenue…

The worst thing about traffic drops…

You just do not know where they come from. They are nearly impossible to avoid. You can only “prevent” them after they have happened…

Spam label?

Core update?

Manual penalty?

Technical issues?

The latest cases we have been working on come from migrations and deployments.

You changed CMS, migrated to a new domain, changed the website theme… Maybe all at once.

The case I am going to share today was a theme update. Not even a new theme, just the same website theme with a few structural changes. It sounds innocent, but it turned into this:

This sudden drop cut revenue by around 40%

Case:

WooCommerce

Home & Garden

B2C, Retail

Ready? Let’s go!

Start At The “Pages” GSC Report

I received an email that read something like this:

“We migrated the theme and lost most of the traffic. We have 1000s of issues on our Ahrefs report but have no idea where to start.”

Whenever someone reaches out, we automatically open Screaming Frog and crawl the hell out of the website. This crawl took a bit longer than expected, which was already a clue about what was going on.

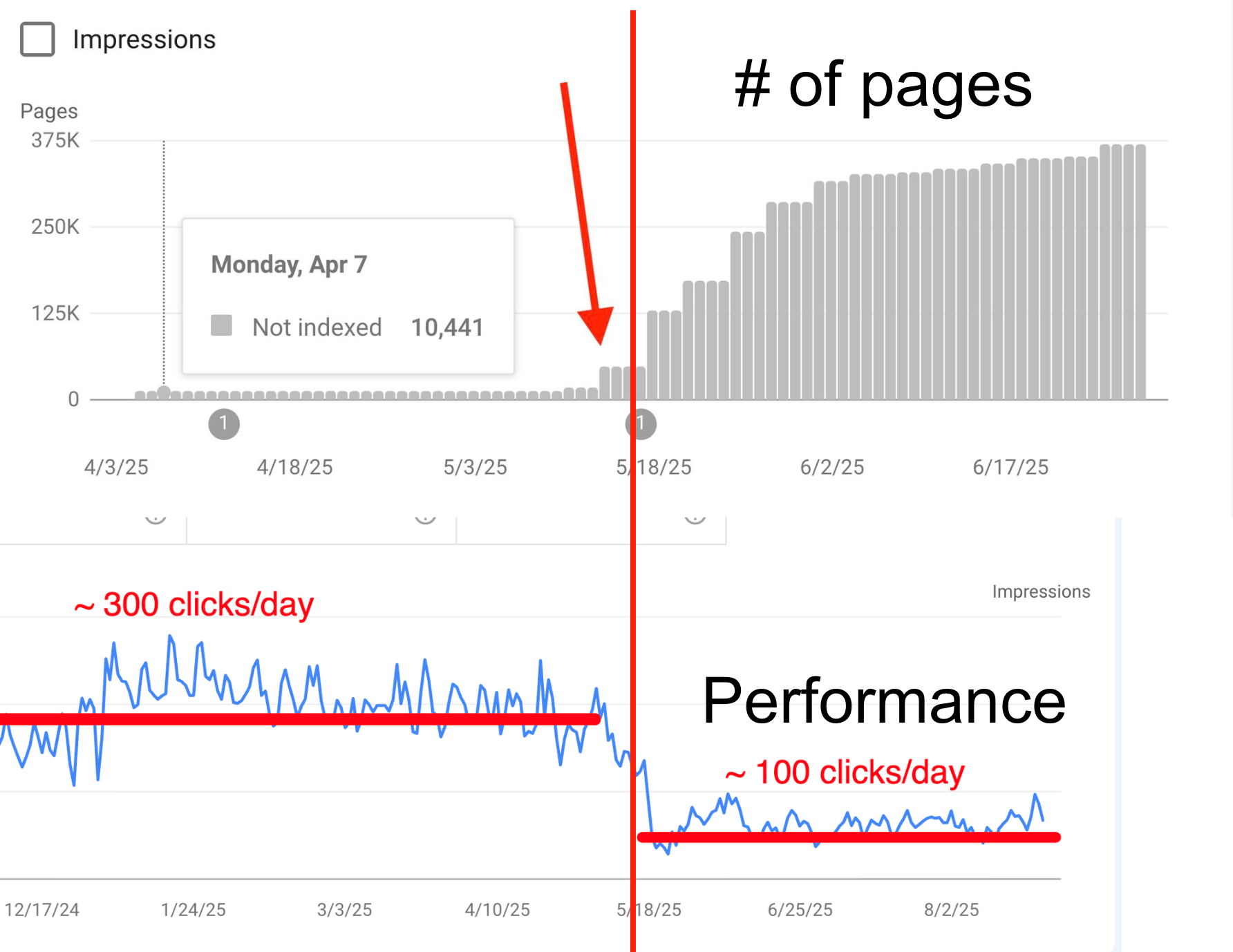

The website had no more than a few thousand products, but this is what I encountered when I opened their Search Console:

Sudden exponential growth of pages can be considered spam

If we put it together with the performance report:

You’ll see the match between the number of pages and search performance:

Exponential growth in the number of pages directly impacted search performance

It all happened around May ‘25. It may look like Google gets triggered easily, but to put it in perspective:

The website went from 10k pages with issues to 60k pages within 4 days.

And it reached almost 500k pages in the “gray” report in Search Console.

Our only goal was to clean the website and deliver a clean index.

I teamed up with Patryk Wawok for this one.

Index Clean Up - 3 Simple Steps

While analyzing the Google Search Console reports, we found that a significant portion of the pages excluded from the index were just pages with filtered results. This is called faceted navigation. You are definitely familiar with it:

Faceted navigation can be an issue for SEO if each filter option becomes a landing page

They also had those typical links that show you results ORDERED_BY price, relevance, etc. All of them were adding thousands upon thousands of new irrelevant pages to the crawl.

Every single filter combination was a landing page. I mean EVERY filter combination. Imagine 12 different filters with 4-5 options each. We could end up with 250k+ pages from filter combinations alone. Multiply that by an extra 4-5 ORDERED_BY options and you get yourself 1M+ irrelevant pages.

Each of these pages was canonicalized to the core page, which was a small relief at least, as this signals the pages are not that relevant. Yet they kill the crawlability of the website and have to be fixed.

The filtering options were not indexed (woohoo!), which allowed us to implement this approach:
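To get a feel for how fast these numbers grow, here is a rough back-of-the-envelope sketch. The function name and the `max_active` cap (how many filters a visitor can combine at once) are assumptions for illustration; the real site's limits are not known, so treat the output as an order of magnitude, not a measurement.

```python
from math import comb


def count_filter_pages(num_filters: int, options_per_filter: int, max_active: int) -> int:
    """Count URLs where 1..max_active filters are set, one option per active filter."""
    return sum(
        comb(num_filters, k) * options_per_filter ** k
        for k in range(1, max_active + 1)
    )


# Assumption: 12 filters, at most 4 combined at once.
with_4_options = count_filter_pages(12, 4, 4)
with_5_options = count_filter_pages(12, 5, 4)

print(with_4_options)      # 141904
print(with_5_options)      # 338585
print(with_4_options * 5)  # 709520 once 5 sort orders are layered on top
```

Even with a cap of four combined filters, the count lands in the hundreds of thousands, and every sort order multiplies it again.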

Remove the faceted navigation and filter links: we have to remove the source of the issue. All links pointing to filter pages were set to be removed. The filter functionality (fundamental for users) can be kept as a JavaScript function (click or form).

Block filter URLs from the crawl: Block filtered pages from crawling using the following directives in robots.txt.

Disallow: /*filter_
Disallow: /*filters=
Disallow: /*ordered_by=

Add a “noindex, follow” robots meta tag on each page with a filter URL combination to prevent future issues. This can be done because the pages were not indexed previously.

Add a self-referencing canonical to filter URLs to isolate them and avoid sending them to the core valid pages via canonical.

Cleaning the index grows SEO performance
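As a minimal sketch of the noindex and canonical steps above: the three markers come from the robots.txt rules in this issue, while the function names and the example.com URL are hypothetical. A real WooCommerce setup would do this in the theme or a plugin, so this only illustrates the logic.

```python
from html import escape

# Substrings taken from the robots.txt rules; adjust to your own URL scheme.
FILTER_MARKERS = ("filter_", "filters=", "ordered_by=")


def is_filter_url(url: str) -> bool:
    """True when the URL contains any of the filter or sort markers."""
    return any(marker in url for marker in FILTER_MARKERS)


def filter_head_tags(url: str) -> list[str]:
    """Extra <head> tags for filter URLs; core pages get none from this helper."""
    if not is_filter_url(url):
        return []
    return [
        '<meta name="robots" content="noindex, follow">',
        # Self-referencing canonical: the filter URL points at itself.
        f'<link rel="canonical" href="{escape(url, quote=True)}">',
    ]


for tag in filter_head_tags("https://example.com/shop/?filter_color=red&ordered_by=price"):
    print(tag)
```

Keeping the detection in one helper means the same check can drive the meta tag, the canonical and any audit script you run against a crawl export.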

Extra Fixes To Recover From The Traffic Drop

Internal linking suffered. Breadcrumbs disappeared and automated internal links between pages were also gone. The website barely had any hierarchy: bad for engines and bad for users.

We are working on 2 major fixes:

None of the pages had a proper breadcrumb path. Each one had only 2 links:

Home > Collection Page

Home > Product Page

We are adding full paths in both HTML and Schema markup, like:

Home > Parent Collection > Child > Long-Tail > Product Page

This creates a clear hierarchy and “shelves” all content, which is then easier for search engines to crawl, index and serve.
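For the Schema side, a full breadcrumb path can be expressed as a schema.org BreadcrumbList. The sketch below builds the JSON-LD from an ordered trail; the function name, page names and example.com URLs are placeholders, not the client's real structure.

```python
import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> str:
    """Build BreadcrumbList JSON-LD from an ordered list of (name, url) pairs."""
    items = [
        {"@type": "ListItem", "position": position, "name": name, "item": url}
        for position, (name, url) in enumerate(trail, start=1)
    ]
    data = {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": items,
    }
    return json.dumps(data, indent=2)


# Hypothetical trail mirroring Home > Parent Collection > Child > Product Page.
trail = [
    ("Home", "https://example.com/"),
    ("Garden Furniture", "https://example.com/garden-furniture/"),
    ("Chairs", "https://example.com/garden-furniture/chairs/"),
    ("Oak Folding Chair", "https://example.com/product/oak-folding-chair/"),
]
print(breadcrumb_jsonld(trail))
```

The output goes inside a `<script type="application/ld+json">` tag, and the same trail should feed the visible HTML breadcrumb so both stay in sync.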

Implement Automated Internal Links

Anything from related products to related collections and related posts enhances crawlability and discoverability of content.

You have to ensure those blocks are:

Dynamic based on each page (meaning they have to be different based on the current content)

Rendered on the server

Automated
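The three requirements above can be sketched as follows. This is only one possible approach, assuming pages carry tags (categories, attributes) that can be compared; the `Page` class, the scoring by shared tags and the `related` CSS class are all illustrative choices, not the setup used on this website.

```python
from dataclasses import dataclass
from html import escape


@dataclass(frozen=True)
class Page:
    url: str
    title: str
    tags: frozenset[str]


def related_pages(current: Page, catalog: list[Page], limit: int = 4) -> list[Page]:
    """Dynamic: rank other pages by how many tags they share with the current one."""
    scored = [(len(current.tags & page.tags), page) for page in catalog if page.url != current.url]
    scored = [(score, page) for score, page in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1].url))  # stable, repeatable order
    return [page for _, page in scored[:limit]]


def render_related_block(current: Page, catalog: list[Page]) -> str:
    """Server-rendered: plain <a href> links in the HTML response, no JavaScript needed."""
    pages = related_pages(current, catalog)
    if not pages:
        return ""
    links = "\n".join(
        f'  <li><a href="{escape(p.url, quote=True)}">{escape(p.title)}</a></li>' for p in pages
    )
    return f'<ul class="related">\n{links}\n</ul>'


catalog = [
    Page("/product/oak-chair/", "Oak Chair", frozenset({"chairs", "oak"})),
    Page("/product/oak-table/", "Oak Table", frozenset({"tables", "oak"})),
    Page("/product/steel-chair/", "Steel Chair", frozenset({"chairs", "steel"})),
    Page("/product/hose/", "Garden Hose", frozenset({"watering"})),
]
print(render_related_block(catalog[0], catalog))
```

Because the block is computed from the catalog on every request, it stays automated: new products show up in related blocks without anyone editing links by hand.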

Results so far

+65% already recovered

+100% traffic growth from the drop

+350k pages removed

Other fixes we are working on:

- Removal and block of thousands of filter pages

- Remove JS rendering from crucial content

- Redirect of thousands of 404s

- Improve navigation on mobile

- Enhancing product schema

- Fixing breadcrumbs

- URL standardization

Reply to this email with “Technical SEO” if you learned something new 🙂

Note: if you reply to this email it also helps email systems understand I’m legit and not spam.

Recommended Reads

🔎 How to Analyze Sudden Traffic Drops

[Despina Gavoyannis - Ahrefs’ Blog]

🔎 Structured Data For AI Search

[Jarno van Driel - Candour Podcast]

🔎 Hubspot’s Blog Traffic Drop

[Aleyda Solis]

🔎 Monitor Search Traffic Drops

[Google Search Central]

🔎 Do We Care About Traffic?

[Tory Gray - SEJ]

Until next time 👋
